In the last two posts I introduced the AI Dev Gallery that Microsoft has been working on, and then demonstrated how code exported from the AI Dev Gallery can be added to an Uno Platform application. Unfortunately, whilst the code compiles for all of the Uno Platform’s target platforms, the application doesn’t work correctly on non-Windows platforms. In this post we’ll look at why the application fails and how we can fix it.

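Let’s start by trying to run the application on Android. The first issue we see is that the path to the Faster RCNN 10 model (FasterRCNN-10.onnx) isn’t resolved correctly: we can’t just assume the model sits at a path relative to the application’s InstalledLocation. On Android, for example, packaged content lives inside the APK rather than as loose files on disk. Instead, we can request the file through an ms-appx:/// URI using StorageFile.GetFileFromApplicationUriAsync and pass the resulting file’s Path to ONNX Runtime. The OnNavigatedTo and InitModel methods can be updated as follows: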

```csharp
protected override async void OnNavigatedTo(Microsoft.UI.Xaml.Navigation.NavigationEventArgs e)
{
    // Original exported code: builds a file-system path from InstalledLocation, which fails on Android
    //await InitModel(System.IO.Path.Join(Windows.ApplicationModel.Package.Current.InstalledLocation.Path, "Models", @"FasterRCNN-10.onnx"));

    await InitModel("Models/FasterRCNN-10.onnx");
}

private Task InitModel(string modelPath)
{
    return Task.Run(async () =>
    {
        if (_inferenceSession != null)
        {
            return;
        }

        // Resolve the packaged model through an ms-appx URI to get a file with a usable path
        var file = await Windows.Storage.StorageFile.GetFileFromApplicationUriAsync(new Uri($"ms-appx:///{modelPath}"));

        SessionOptions sessionOptions = new();
        sessionOptions.RegisterOrtExtensions();

        _inferenceSession = new InferenceSession(file.Path, sessionOptions);
    });
}
```
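The key change is swapping a file-system path for an ms-appx:/// URI. If the relative path is ever built with Path.Join, it will contain backslashes on Windows, so a small normalizing helper can keep the URI well formed. The sketch below is an illustration under that assumption; AppPackageUri and FromRelativePath are hypothetical names, not Uno Platform APIs.

```csharp
using System;

// Hypothetical helper: not part of Uno Platform or the AI Dev Gallery export.
public static class AppPackageUri
{
    // Builds an ms-appx:/// URI for an asset path relative to the app package,
    // e.g. "Models/FasterRCNN-10.onnx" or @"Models\FasterRCNN-10.onnx".
    public static Uri FromRelativePath(string relativePath)
    {
        if (string.IsNullOrWhiteSpace(relativePath))
        {
            throw new ArgumentException("An asset path is required.", nameof(relativePath));
        }

        // URIs use forward slashes; strip leading slashes so exactly three follow the scheme.
        string normalized = relativePath.Replace('\\', '/').TrimStart('/');
        return new Uri($"ms-appx:///{normalized}");
    }
}
```

For example, `AppPackageUri.FromRelativePath(@"Models\FasterRCNN-10.onnx")` yields a URI whose OriginalString is `ms-appx:///Models/FasterRCNN-10.onnx`, ready to pass to GetFileFromApplicationUriAsync.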

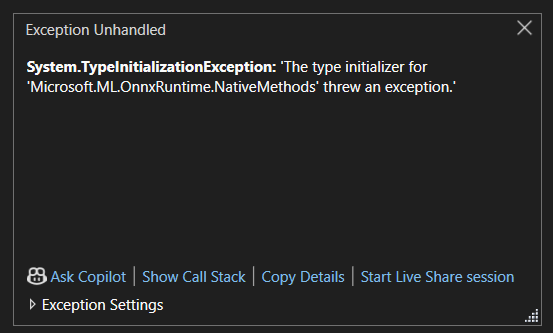

Now that we have the correct path to the model being passed into the InferenceSession constructor, we see a different issue emerge. A TypeInitializationException is raised when trying to initialize the NativeMethods type.
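On its own, a TypeInitializationException says little beyond which type failed; the underlying error is carried in its InnerException, which is worth logging when debugging on a device. The self-contained sketch below simulates the situation with a hypothetical FailingNativeMethods class whose static constructor throws a DllNotFoundException (a stand-in, not ONNX Runtime’s actual type or error), then walks the InnerException chain to report the root cause.

```csharp
using System;

// Hypothetical stand-in for a type whose static initialization fails.
public static class FailingNativeMethods
{
    // An explicit static constructor runs right before the first access to a static member.
    static FailingNativeMethods()
    {
        throw new DllNotFoundException("Unable to load native library 'example'.");
    }

    public static int GetVersion() => 1;
}

public static class TypeInitDiagnostics
{
    // Runs the action; if a type initializer fails, logs and returns the innermost exception.
    public static Exception? RootCauseOfTypeInitFailure(Action action)
    {
        try
        {
            action();
            return null;
        }
        catch (TypeInitializationException ex)
        {
            Console.WriteLine($"Type initializer for '{ex.TypeName}' threw an exception.");

            Exception root = ex;
            while (root.InnerException is not null)
            {
                root = root.InnerException;
            }

            Console.WriteLine($"Root cause: {root.GetType().Name}: {root.Message}");
            return root;
        }
    }
}
```

Calling `TypeInitDiagnostics.RootCauseOfTypeInitFailure(() => FailingNativeMethods.GetVersion())` reports the DllNotFoundException rather than just the wrapper.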

I realised that this was caused by the current set of PackageReferences, which includes Microsoft.ML.OnnxRuntime.DirectML. This package is a build of ONNX Runtime that uses DirectML, Microsoft’s DirectX 12-based machine learning API, which explains why it isn’t compatible with Android (or any other platform without DirectX 12). Let’s update the package references.

```xml
<ItemGroup>
  <PackageReference Include="Microsoft.ML.OnnxRuntime" Condition="'$(TargetFramework)' != 'net8.0-windows10.0.26100'" />
  <PackageReference Include="Microsoft.ML.OnnxRuntime.DirectML" Condition="'$(TargetFramework)' == 'net8.0-windows10.0.26100'" />
  <PackageReference Include="Microsoft.ML.OnnxRuntime.Extensions" />
  <PackageReference Include="System.Drawing.Common" />
</ItemGroup>
```

The Microsoft.ML.OnnxRuntime package is the standard CPU build of ONNX Runtime and ships native binaries for a range of platforms, including Android. We’re conditionally referencing the DirectML package for the Windows target and Microsoft.ML.OnnxRuntime for every other target.
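Both conditions compare the full TFM string, so they need editing if the Windows target changes, for example to net8.0-windows10.0.19041. MSBuild’s `$([MSBuild]::GetTargetPlatformIdentifier('$(TargetFramework)'))` property function returns just the platform name (such as windows or android), which makes a sturdier condition. To illustrate which package each target would get under that approach, here is a C# sketch that mimics the parsing; TargetFrameworkInfo is a hypothetical helper, not an MSBuild API, and it only handles the simple `netX.Y-platformVERSION` form.

```csharp
using System;

// Hypothetical helper mirroring what GetTargetPlatformIdentifier reports for simple TFMs.
public static class TargetFrameworkInfo
{
    public static string GetPlatformIdentifier(string targetFramework)
    {
        int dash = targetFramework.IndexOf('-');
        if (dash < 0)
        {
            return string.Empty; // e.g. "net8.0" has no platform component
        }

        string platform = targetFramework[(dash + 1)..];

        // Keep the leading letters ("windows", "android", "ios", ...) and
        // drop any trailing platform version such as "10.0.26100".
        int end = 0;
        while (end < platform.Length && char.IsLetter(platform[end]))
        {
            end++;
        }
        return platform[..end];
    }

    // True when the DirectML package would be referenced for this TFM.
    public static bool UseDirectML(string targetFramework) =>
        string.Equals(GetPlatformIdentifier(targetFramework), "windows", StringComparison.OrdinalIgnoreCase);
}
```

With this approach, any Windows TFM version selects DirectML, while android, ios and browserwasm targets fall back to Microsoft.ML.OnnxRuntime.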

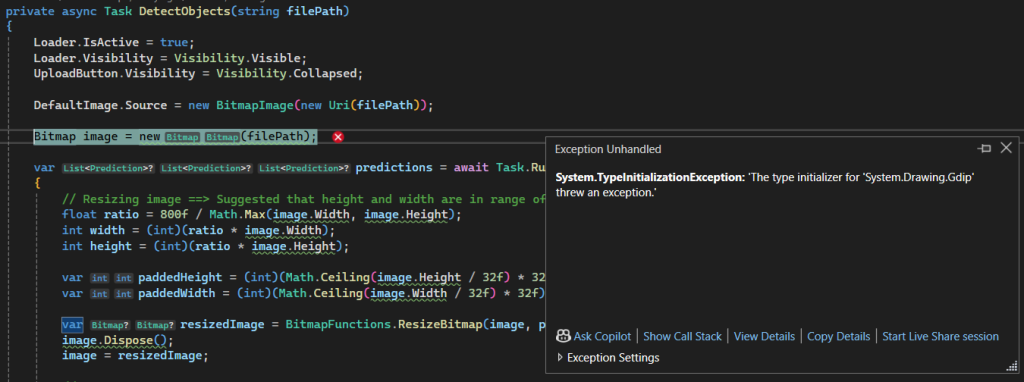

With these changes, the application starts up correctly. However, when we go to select an image, we experience further issues with any code that uses the System.Drawing.Common package.

I’m going to rant a little here, because it’s annoying that Microsoft publishes a netstandard2.0 library that is only designed to work on Windows. This library should only include Windows TFMs. Alternatively, if the intent is to allow the library to compile for all targets, the methods should be explicitly marked as not implemented for every target except Windows.

OK, rant over, but the question remains: how do we replace System.Drawing.Common? A while ago I posted on using ImageSharp with the Uno Platform, so it makes sense to use that library here. Let’s update the PackageReferences:

```xml
<ItemGroup>
  <PackageReference Include="Microsoft.ML.OnnxRuntime" Condition="'$(TargetFramework)' != 'net8.0-windows10.0.26100'" />
  <PackageReference Include="Microsoft.ML.OnnxRuntime.DirectML" Condition="'$(TargetFramework)' == 'net8.0-windows10.0.26100'" />
  <PackageReference Include="Microsoft.ML.OnnxRuntime.Extensions" />
  <!--<PackageReference Include="System.Drawing.Common" />-->
  <PackageReference Include="SixLabors.ImageSharp" />
  <PackageReference Include="SixLabors.ImageSharp.Drawing" />
</ItemGroup>
```

And now we need to replace the methods in BitmapFunctions.cs, which use methods in System.Drawing.Common, with equivalent methods that use ImageSharp. Not all methods in BitmapFunctions.cs are used by this application, so I’ve only migrated the two methods required into a static class, ImageFunctions.

```csharp
using Microsoft.ML.OnnxRuntime.Tensors;
using Microsoft.UI.Xaml.Media.Imaging;
using SixLabors.Fonts;
using SixLabors.ImageSharp;
using SixLabors.ImageSharp.Drawing.Processing;
using SixLabors.ImageSharp.PixelFormats;
using SixLabors.ImageSharp.Processing;

namespace ObjectDetection;

internal static class ImageFunctions
{
    // FasterRCNN-10 expects BGR pixel values in [0, 255] with these per-channel means
    // subtracted (ONNX Model Zoo preprocessing). ImageNet-style means such as
    // [0.485, 0.456, 0.406] assume pixels scaled to [0, 1] and don't apply here.
    private static readonly float[] Mean = [102.9801f, 115.9465f, 122.7717f];

    private static readonly Uri FontUri = new("ms-appx:///Uno.Fonts.OpenSans/Fonts/OpenSans.ttf");

    public static DenseTensor<float> PreprocessBitmapForObjectDetection(Image<Rgb24> bitmap, int paddedHeight, int paddedWidth)
    {
        DenseTensor<float> input = new([3, paddedHeight, paddedWidth]);

        bitmap.ProcessPixelRows(pixelAccessor =>
        {
            for (int y = 0; y < pixelAccessor.Height; y++)
            {
                Span<Rgb24> row = pixelAccessor.GetRowSpan(y);

                // Using row.Length helps JIT to eliminate bounds checks when accessing row[x].
                for (int x = 0; x < row.Length; x++)
                {
                    // Planar (CHW) layout in BGR channel order
                    input[0, y, x] = row[x].B - Mean[0];
                    input[1, y, x] = row[x].G - Mean[1];
                    input[2, y, x] = row[x].R - Mean[2];
                }
            }
        });

        return input;
    }

    public static async Task<BitmapImage> RenderPredictions(Image<Rgb24> image, List<Prediction> predictions)
    {
        FontCollection collection = new();
#if WINDOWS
        collection.AddSystemFonts();
        collection.TryGet("Arial", out var family);
#else
        // Other targets load the Open Sans font bundled by Uno.Fonts.OpenSans
        var fontFile = await Windows.Storage.StorageFile.GetFileFromApplicationUriAsync(FontUri);
        collection.Add(fontFile.Path);
        collection.TryGet("Open Sans", out var family);
#endif

        image.Mutate(g =>
        {
            // Draw predictions; marker and font sizes scale with the image dimensions
            int markerSize = (int)((image.Width + image.Height) * 0.04 / 2);
            int fontSize = Math.Max((int)((image.Width + image.Height) * .04 / 2), 1);

            // Keep the pen at least 1px wide for small images
            Pen pen = new SolidPen(Color.Red, Math.Max(markerSize / 10, 1));
            var brush = new SolidBrush(Color.White);
            Font font = family.CreateFont(fontSize);

            foreach (var p in predictions)
            {
                if (p == null || p.Box == null)
                {
                    continue;
                }

                // Draw the box
                g.DrawLine(pen, new PointF(p.Box.Xmin, p.Box.Ymin), new PointF(p.Box.Xmax, p.Box.Ymin));
                g.DrawLine(pen, new PointF(p.Box.Xmax, p.Box.Ymin), new PointF(p.Box.Xmax, p.Box.Ymax));
                g.DrawLine(pen, new PointF(p.Box.Xmax, p.Box.Ymax), new PointF(p.Box.Xmin, p.Box.Ymax));
                g.DrawLine(pen, new PointF(p.Box.Xmin, p.Box.Ymax), new PointF(p.Box.Xmin, p.Box.Ymin));

                // Draw the label and confidence
                string labelText = $"{p.Label}, {p.Confidence:0.00}";
                g.DrawText(labelText, font, brush, new PointF(p.Box.Xmin, p.Box.Ymin));
            }
        });

        // Encode as PNG and load it into a BitmapImage for display
        BitmapImage bitmapImage = new();
        using (MemoryStream memoryStream = new())
        {
            image.Save(memoryStream, SixLabors.ImageSharp.Formats.Png.PngFormat.Instance);
            memoryStream.Position = 0;
            bitmapImage.SetSource(memoryStream.AsRandomAccessStream());
        }

        return bitmapImage;
    }
}
```
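The ImageSharp code writes each pixel through the DenseTensor indexer. To make the expected memory layout explicit, here is a dependency-free sketch of the same preprocessing: interleaved RGB bytes become a planar channel-height-width (CHW) buffer in BGR order, with a per-channel mean subtracted and the unused padded area left at zero. The mean values are an assumption based on the ONNX Model Zoo’s published preprocessing for FasterRCNN-10, and ChwPreprocessor is a hypothetical name.

```csharp
using System;

// Hypothetical, dependency-free sketch of FasterRCNN-style preprocessing.
public static class ChwPreprocessor
{
    // Assumed BGR means (ONNX Model Zoo description of FasterRCNN-10).
    private static readonly float[] BgrMeans = new float[] { 102.9801f, 115.9465f, 122.7717f };

    // rgb: row-major, 3 bytes per pixel (R, G, B). Returns [3, paddedHeight, paddedWidth] flattened.
    public static float[] ToPaddedBgrChw(byte[] rgb, int width, int height, int paddedWidth, int paddedHeight)
    {
        if (rgb.Length != width * height * 3)
            throw new ArgumentException("Expected 3 bytes per pixel.", nameof(rgb));
        if (paddedWidth < width || paddedHeight < height)
            throw new ArgumentException("Padded size must be at least the image size.");

        int plane = paddedWidth * paddedHeight;
        var output = new float[3 * plane]; // padded area stays 0

        for (int y = 0; y < height; y++)
        {
            for (int x = 0; x < width; x++)
            {
                int src = (y * width + x) * 3;  // interleaved source offset
                int dst = y * paddedWidth + x;  // offset within one channel plane

                output[dst] = rgb[src + 2] - BgrMeans[0];          // B plane
                output[plane + dst] = rgb[src + 1] - BgrMeans[1];  // G plane
                output[2 * plane + dst] = rgb[src] - BgrMeans[2];  // R plane
            }
        }

        return output;
    }
}
```

A flat buffer like this can be wrapped without copying via DenseTensor<float>’s (Memory<float>, ReadOnlySpan<int>) constructor, using dimensions [3, paddedHeight, paddedWidth]. Writing to a flat array also skips the tensor indexer’s per-call index calculation, which may be worth measuring if preprocessing turns out to be a bottleneck.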

Lastly, the code in MainPage.xaml.cs needs to be updated to use ImageSharp and ImageFunctions. Here I’ve included the UploadButton_Click and DetectObjects methods, which contain the changes required to select an image, load it as an Image<Rgb24>, prepare it for object detection, and finally render an updated Image<Rgb24> to display the results.

```csharp
private async void UploadButton_Click(object sender, RoutedEventArgs e)
{
    var window = App.MainWindow ?? new Window();
    var hwnd = WinRT.Interop.WindowNative.GetWindowHandle(window);

    var picker = new FileOpenPicker();
    WinRT.Interop.InitializeWithWindow.Initialize(picker, hwnd);

    picker.FileTypeFilter.Add(".png");
    picker.FileTypeFilter.Add(".jpeg");
    picker.FileTypeFilter.Add(".jpg");
    picker.ViewMode = PickerViewMode.Thumbnail;

    var file = await picker.PickSingleFileAsync();
    if (file != null)
    {
        UploadButton.Focus(FocusState.Programmatic);
        await DetectObjects(file);
    }
}

private async Task DetectObjects(StorageFile file)
{
    Loader.IsActive = true;
    Loader.Visibility = Visibility.Visible;
    UploadButton.Visibility = Visibility.Collapsed;

    DefaultImage.Source = new BitmapImage(new Uri(file.Path));

    Image<Rgb24>? image = default;

    var predictions = await Task.Run(async () =>
    {
        using var strm = await file.OpenStreamForReadAsync();
        image = Image<Rgb24>.Load<Rgb24>(strm);

        // Resizing image ==> Suggested that height and width are in range of [800, 1333].
        float ratio = 800f / Math.Max(image.Width, image.Height);
        int width = (int)(ratio * image.Width);
        int height = (int)(ratio * image.Height);

        // Pad the scaled dimensions up to the next multiple of 32
        var paddedHeight = (int)(Math.Ceiling(height / 32f) * 32f);
        var paddedWidth = (int)(Math.Ceiling(width / 32f) * 32f);

        // ResizeMode.Pad fits the image inside the padded size (keeping its aspect ratio) and pads the rest
        var resizedImage = image.Clone(ctx =>
        {
            ctx.Resize(new ResizeOptions
            {
                Size = new Size(paddedWidth, paddedHeight),
                Mode = ResizeMode.Pad
            });
        });

        image.Dispose();
        image = resizedImage;

        // Preprocessing
        Tensor<float> input = ImageFunctions.PreprocessBitmapForObjectDetection(image, paddedHeight, paddedWidth);

        // Setup inputs and outputs
        var inputMetadataName = _inferenceSession!.InputNames[0];
        var inputs = new List<NamedOnnxValue>
        {
            NamedOnnxValue.CreateFromTensor(inputMetadataName, input)
        };

        // Run inference
        using IDisposableReadOnlyCollection<DisposableNamedOnnxValue> results = _inferenceSession!.Run(inputs);

        // Postprocess: outputs are boxes (4 floats per detection), labels and confidences
        var resultsArray = results.ToArray();
        float[] boxes = resultsArray[0].AsEnumerable<float>().ToArray();
        long[] labels = resultsArray[1].AsEnumerable<long>().ToArray();
        float[] confidences = resultsArray[2].AsEnumerable<float>().ToArray();

        var predictions = new List<Prediction>();
        var minConfidence = 0.7f;

        // i + 3 < boxes.Length visits every box, including the last one
        for (int i = 0; i + 3 < boxes.Length; i += 4)
        {
            var index = i / 4;
            if (confidences[index] >= minConfidence)
            {
                predictions.Add(new Prediction
                {
                    Box = new Box(boxes[i], boxes[i + 1], boxes[i + 2], boxes[i + 3]),
                    Label = RCNNLabelMap.Labels[labels[index]],
                    Confidence = confidences[index]
                });
            }
        }

        return predictions;
    });

    BitmapImage outputImage = await ImageFunctions.RenderPredictions(image!, predictions);

    DispatcherQueue.TryEnqueue(() =>
    {
        DefaultImage.Source = outputImage;
        Loader.IsActive = false;
        Loader.Visibility = Visibility.Collapsed;
        UploadButton.Visibility = Visibility.Visible;
    });

    image?.Dispose();
}
```
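The postprocessing loop treats the three model outputs as parallel arrays: four box coordinates (xmin, ymin, xmax, ymax) per detection in the first, then one label and one confidence per detection. The sketch below isolates that decoding with no ONNX Runtime dependency; DetectedBox, Detection and DetectionDecoder are hypothetical stand-ins for the exported Prediction and Box types. Note the loop bound: a condition written as `i < boxes.Length - 4` never reaches the final box, whereas `i + 3 < boxes.Length` visits every complete group of four.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-ins for the exported Box and Prediction classes.
public sealed record DetectedBox(float Xmin, float Ymin, float Xmax, float Ymax);
public sealed record Detection(DetectedBox Box, string Label, float Confidence);

public static class DetectionDecoder
{
    // boxes: 4 floats per detection; labels and confidences: 1 entry per detection.
    public static List<Detection> Decode(float[] boxes, long[] labels, float[] confidences,
        IReadOnlyList<string> labelMap, float minConfidence = 0.7f)
    {
        var detections = new List<Detection>();

        // Step through complete groups of four coordinates, including the last one.
        for (int i = 0; i + 3 < boxes.Length; i += 4)
        {
            int index = i / 4;
            if (confidences[index] < minConfidence)
            {
                continue; // drop low-confidence detections
            }

            detections.Add(new Detection(
                new DetectedBox(boxes[i], boxes[i + 1], boxes[i + 2], boxes[i + 3]),
                labelMap[(int)labels[index]],
                confidences[index]));
        }

        return detections;
    }
}
```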

Our application is now good to go on Android. For some reason I didn’t have much luck running this code on an Android emulator, but it runs fine on a physical Android device. That said, the object detection isn’t particularly fast; there might be some room to optimize the image manipulation code.
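One lever worth checking when chasing performance is input size, since the work a convolutional detector does generally grows with the number of pixels it processes. The comment in DetectObjects suggests scaling the image (here so the longer side is 800 px) before padding each side up to a multiple of 32. A small sketch of that arithmetic, using a hypothetical InputSizing helper, makes the numbers concrete: a 4000 × 3000 photo becomes an 800 × 600 image padded to 800 × 608.

```csharp
using System;

// Hypothetical helper reproducing the sizing arithmetic described in DetectObjects.
public static class InputSizing
{
    public static (int Width, int Height, int PaddedWidth, int PaddedHeight) Compute(
        int imageWidth, int imageHeight, float targetLongSide = 800f, int multiple = 32)
    {
        // Scale so the longer side matches targetLongSide, preserving aspect ratio.
        float ratio = targetLongSide / Math.Max(imageWidth, imageHeight);
        int width = (int)(ratio * imageWidth);
        int height = (int)(ratio * imageHeight);

        // Round each scaled dimension up to the next multiple (32 by default).
        int paddedWidth = (int)(Math.Ceiling(width / (float)multiple) * multiple);
        int paddedHeight = (int)(Math.Ceiling(height / (float)multiple) * multiple);

        return (width, height, paddedWidth, paddedHeight);
    }
}
```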